The scudo allocator is a modern memory allocator designed with a strong focus on security. The name, derived from the Latin word for "shield," reflects its main purpose of protecting software applications against vulnerabilities related to memory issues. Scudo offers improved memory safety guarantees and helps mitigate the risks associated with memory-based attacks—proving to be invaluable in cybersecurity for law enforcement.

However, compared to older allocators, scudo has received limited public vulnerability research and exploration. In this series of blog posts, our goal is to bridge this gap by providing an in-depth analysis of scudo‘s internal workings, examining strategies for exploit development, and introducing helpful tools that aid in this process.

The first post is an in depth practical look at the allocator, with an introduction to the source code. It is code-centric but tries to abstract where possible.

Terminology

The terminology can vary between allocators, so to avoid confusion, here are a basic few terms we will explore later in full:

- Chunk: The space of memory returned to the user from a malloc call.

- Block: A fundamental unit of memory in

scudo, comprising chunk metadata and a user data. This user data space is what the pointer from amalloccall references. - Region: A contiguous memory area that stores blocks of a specific size.

- Cache: Common in many allocators, caches enhance memory access speed and data locality for faster allocations.

- Guard Pages: Sequential memory pages set without permissions, triggering a segmentation fault upon any access attempt (read/write).

- Primary Allocator: The mechanism for managing smaller memory allocation requests.

- Secondary Allocator: The mechanism for handling larger memory allocation requests.

- Transfer Batch: A group of free chunks assembled for efficient movement between the cache and the primary allocator.

- Quarantine: A mechanism of protecting blocks from immediate use-after-free.

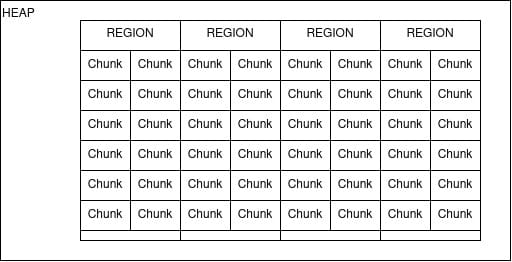

Here is an abstract simplification of the structure to keep in mind when reading on:

scudo basics

The scudo allocator has some features that distinguish it from other allocators, among them are:

- Limited metadata on the heap, with only chunk headers present.

- Region isolation using guard pages to separate memory regions from each other.

- Heap randomization, shuffling blocks within each region.

- Integrity checks integrated into each block’s header.

- Delayed freelist to prevent immediate chunk reuse and avoid use-after-free vulnerabilities (disabled and not covered here).

It is important to note that scudo is heavily customisable, and many of these features can be tweaked or disabled in specific configurations. Therefore the specifics of this deep dive can vary between processes and devices. I will also only focus on the 64-bit version of scudo, to keep things simpler and more relevant.

scudo consists of two allocator strategies: the Primary Allocator and the Secondary Allocator. The Primary Allocator handles smaller allocations up to 64KB, while the Secondary Allocator handles larger ones in a similar fashion to known allocators (eg. dlmalloc) making use of the mmap syscall.

Primary

The Primary allocator in scudo is responsible for servicing smaller allocation sizes. It carves reserved memory regions into blocks of the same size and manages these. There are two Primary allocators implemented, one for 32-bit architectures and another for 64-bit architectures. The Primary allocator for 64-bit architectures will be the primary focus of this blogpost.

Secondary

The Secondary allocator in scudo is responsible for servicing large allocation requests (everything above 0x10010), it uses mmap under the hood. The Secondary allocator will not be the focus of this blog post because finding primitives in large allocations is comparatively rare to smaller size objects. A blogpost exploring the secondary allocator will be released in the future. It is worth noting that the security features of the secondary allocator are more lax than the primary, and it should not be discounted as a vector of exploitation.

The scudo Source

The scudo allocator is officially maintained by the LLVM-project. The scudo source is C++, and it is not exactly a page-turner. Compared to the rather straightforward internals of the glibc ptmalloc2 implementation, scudo is riddled with abstractions and C++ features. Here is the wrapper for malloc in the source:

INTERFACE WEAK void *SCUDO_PREFIX(malloc)(size_t size) {

return scudo::setErrnoOnNull(SCUDO_ALLOCATOR.allocate(

size, scudo::Chunk::Origin::Malloc, SCUDO_MALLOC_ALIGNMENT));

}This code is pretty dense, but mostly self explanatory.

- The

mallocwrapper function serves as an interface to thescudoallocator, providing a layer of abstraction for memory allocation operations. - The

sizeparameter represents the size of the memory chunk to be allocated. - The

SCUDO_ALLOCATORrefers to the instance of thescudoallocator responsible for managing memory allocations. scudo::Chunk::Origin::Mallocdenotes that the memory allocation originates from amalloccall.SCUDO_MALLOC_ALIGNMENTspecifies the alignment requirements for the allocated memory.

If you are reading the scudo source it is worth keeping a few things in mind:

- Most of the

scudocode is present in header files. scudomakes heavy use of C++ features (classes, inheritance, …).- The source is easy to compile, putting in e.g. informative print statements can be a help when playing around with it.

Building and using the allocator on desktop is as simple as:

cd $LLVM/compiler-rt/lib

clang++ -fPIC -std=c++17 -msse4.2 -O2 -pthread -shared \

-I scudo/standalone/include \

scudo/standalone/*.cpp \

-o $HOME/libscudo.so

ss

# Then preload the lib

LD_PRELOAD=$HOME/libscudo.so ./a.outHere is an overview of some of the key files:

- local_cache.h – implements the local cache, this is where control will be early in an allocation path.

- primaryXX.h – contains the primary allocator implementations (32/64), this is where you will find the region initialization code.

- chunk.h – chunk header layout, checksum computation.

- combined.h – commonalities in 64/32 here, handles MTE, and dispatches requests to the local cache.

Initialization

Like most allocators, initialization is done when a program makes its first request for memory. This is done to avoid unnecessary overhead for programs not using the heap, as well as removing the need for modifying the initialization routines of the linker.

For 64-bit instances the initialization routine is implemented in primary64.h.

// primary64.h

void init(s32 ReleaseToOsInterval) NO_THREAD_SAFETY_ANALYSIS {

DCHECK(isAligned(reinterpret_cast<uptr>(this), alignof(ThisT)));

const uptr PageSize = getPageSizeCached();

const uptr GroupSize = (1U << GroupSizeLog);

const uptr PagesInGroup = GroupSize / PageSize;

const uptr MinSizeClass = getSizeByClassId(1);

// ...

// Reserve the space required for the Primary.

// static const uptr PrimarySize = RegionSize * NumClasses;

CHECK(ReservedMemory.create(/*Addr=*/0U, PrimarySize,

"scudo:primary_reserve"));

// ...

for (uptr I = 0; I < NumClasses; I++) {

RegionInfo *Region = getRegionInfo(I);

// The actual start of a region is offset by a random number of pages

// when PrimaryEnableRandomOffset is set.

Region->RegionBeg =

(PrimaryBase + (I << Config::Primary::RegionSizeLog)) +

(Config::Primary::EnableRandomOffset

? ((getRandomModN(&Seed, 16) + 1) * PageSize)

: 0);

Region->RandState = getRandomU32(&Seed);

// ...

Region->MemMapInfo.MemMap = ReservedMemory.dispatch(

PrimaryBase + (I << Config::Primary::RegionSizeLog), RegionSize);

CHECK(Region->MemMapInfo.MemMap.isAllocated());

}

shuffle(RegionInfoArray, NumClasses, &Seed);

//...

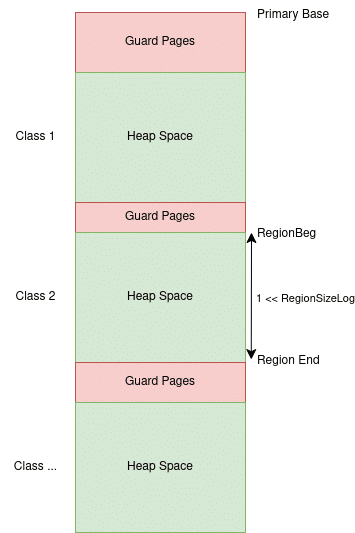

}scudo will begin by reserving all the necessary space (but not commit it) with ReservedMemory.create(). So all of the primary allocator space is contained within the same mapping, and sections of it is are made read/writable, giving the effect of guard-pages.

Classes and Regions

The regions of the primary allocator can be observed in the program mappings (/proc/PID/maps):

...

0x7d826d5000 0x7d82715000 rw-p 40000 0 [anon:scudo:primary]

0x7d82715000 0x7d926d5000 ---p ffc0000 0 [anon_7d82715]

0x7d926d5000 0x7d92715000 rw-p 40000 0 [anon:scudo:primary]

0x7d92715000 0x7db26d7000 ---p 1ffc2000 0 [anon_7d92715]

0x7db26d7000 0x7db2717000 rw-p 40000 0 [anon:scudo:primary]

0x7db2717000 0x7e626ce000 ---p affb7000 0 [anon_7db2717]

0x7e626ce000 0x7e6270e000 rw-p 40000 0 [anon:scudo:primary]

0x7e6270e000 0x7e826ca000 ---p 1ffbc000 0 [anon_7e6270e]

...The regions are only visible when they are "touched", meaning you won’t necessarily see a region until it is used. They are also dynamically resized on the 64-bit version of scudo, meaning the 0x40000 size is the current size of the mapping, but it can grow to 1 << Config::Primary::RegionSizeLog in size (in 0x40000 increments). Half of these entries are the scudo regions, while the other half are guard pages (and uncommitted region space) put in place to mitigate overflows across classes.

The actual region beginning is offset by a random number of pages between 1 and 16:

Region->RegionBeg =

(PrimaryBase + (I << Config::Primary::RegionSizeLog)) +

(Config::Primary::EnableRandomOffset

? ((getRandomModN(&Seed, 16) + 1) * PageSize)

: 0);Each mapping will be at 0x10000000 increments (on android with Config::Primary::RegionSizeLog = 28) in addition to the 1-16 guard pages added.

The following table contains the default values for the size classes in the android version of scudo (size_class_map.h):

| Class ID | Size |

|---|---|

| 1 | 0x20 |

| 2 | 0x30 |

| 3 | 0x40 |

| 4 | 0x50 |

| 5 | 0x60 |

| 6 | 0x70 |

| 7 | 0x90 |

| 8 | 0xb0 |

| 9 | 0xc0 |

| 10 | 0xe0 |

| 11 | 0x120 |

| 12 | 0x160 |

| 13 | 0x1c0 |

| 14 | 0x250 |

| 15 | 0x320 |

| 16 | 0x450 |

| 17 | 0x670 |

| 18 | 0x830 |

| 19 | 0xa10 |

| 20 | 0xc30 |

| 21 | 0x1010 |

| 22 | 0x1210 |

| 23 | 0x1bd0 |

| 24 | 0x2210 |

| 25 | 0x2d90 |

| 26 | 0x3790 |

| 27 | 0x4010 |

| 28 | 0x4810 |

| 29 | 0x5a10 |

| 30 | 0x7310 |

| 31 | 0x8210 |

| 32 | 0x10010 |

Region 0 is reserved for internal allocator use.

The region information is stored in the RegionInfoArray made up of RegionInfo structs.

In scudo version 17 and newer, the region list is shuffled, making the order of the regions random.

Chunk headers

Let us have a look at the chunk metadata stored in scudo chunks.

Due to alignment the chunk metadata does not take up all the bytes it could, but rather takes up 8 bytes, followed by 8 bytes of padding.

The (unpacked) chunk header is defined in chunk.h:

struct UnpackedHeader {

uptr ClassId : 8;

u8 State : 2;

// Origin if State == Allocated, or WasZeroed otherwise.

u8 OriginOrWasZeroed : 2;

uptr SizeOrUnusedBytes : 20;

uptr Offset : 16;

uptr Checksum : 16;

};- ClassId – Is the ID of the class the chunk belongs to.

- state – Is the current state of the block, allocated/freed/quarentined.

- OriginOrWasZeroed – Contains an indication of what type of call allocated the chunk. If the chunk is not allocated the field denotes if the chunk has been zeroed.

- SizeOrUnusedBytes – The requsted size of the chunk, if a reallocation is made on the chunk but the resulting size still fits within the same size class, then only this field is modified.

- Offset – If the chunk does not start at the beginning of a block, what is the offset from the block start to the header (almost always 0).

- Checksum – A two byte integrity checksum.

An example chunk header is printed here:

> dump(malloc(0x103).sub(0x10), 0x10)

0 1 2 3 4 5 6 7 8 9 A B C D E F 0123456789ABCDEF

738426ae60 0b 31 10 00 00 00 43 51 00 00 00 00 00 00 00 00 .1....CQ........Here we see the 0xb class, a state of allocated, and a size of 0x103.

As an exercise to the reader, determine the state, chunk size and classid of the following header:

0 1 2 3 4 5 6 7 8 9 A B C D E F 0123456789ABCDEF

73c42632c0 0f 40 24 00 00 00 26 5f 00 00 00 00 00 00 00 00 .@$...&_........Allocating

The allocate function, called by the malloc wrapper, is defined in combined.h, here are the general steps it takes:

- If initialization has not been done, initialize the allocator for the current thread.

- Adjust the size request to fit alignment.

- Compute the ClassID corresponding to the size.

- Call

TSD->getCache().allocate(ClassId)for the found class.- If the cache allocation fails, the region is exhausted, the allocator will try the next region.

- Manage Memory Tagging Extension (MTE) features, which are currently deactivated on most android systems.

- Call the malloc hook if one is defined.

- Create the chunk header, and return the user pointer.

The general flow can be seen below:

NOINLINE void *allocate(uptr Size, Chunk::Origin Origin,

uptr Alignment = MinAlignment,

bool ZeroContents = false) NO_THREAD_SAFETY_ANALYSIS {

initThreadMaybe();

// ...

// If the requested size happens to be 0 (more common than you might think),

// allocate MinAlignment bytes on top of the header. Then add the extra

// bytes required to fulfill the alignment requirements: we allocate enough

// to be sure that there will be an address in the block that will satisfy

// the alignment.

const uptr NeededSize =

roundUp(Size, MinAlignment) +

((Alignment > MinAlignment) ? Alignment : Chunk::getHeaderSize());

// ...

void *Block = nullptr;

uptr ClassId = 0;

// ...

if (LIKELY(PrimaryT::canAllocate(NeededSize))) {

ClassId = SizeClassMap::getClassIdBySize(NeededSize);

// ...

auto *TSD = TSDRegistry.getTSDAndLock(&UnlockRequired);

TSD->assertLocked(/*BypassCheck=*/!UnlockRequired);

Block = TSD->getCache().allocate(ClassId);

// If the allocation failed, retry in each successively larger class until

// it fits. If it fails to fit in the largest class, fallback to the

// Secondary.

if (UNLIKELY(!Block)) {

while (ClassId < SizeClassMap::LargestClassId && !Block)

Block = TSD->getCache().allocate(++ClassId);

if (!Block)

ClassId = 0;

}

if (UnlockRequired)

TSD->unlock();

}

if (UNLIKELY(ClassId == 0)) {

Block = Secondary.allocate(Options, Size, Alignment, &SecondaryBlockEnd,

FillContents);

}

// ...

const uptr BlockUptr = reinterpret_cast<uptr>(Block);

const uptr UnalignedUserPtr = BlockUptr + Chunk::getHeaderSize();

const uptr UserPtr = roundUp(UnalignedUserPtr, Alignment);

/// ...

return TaggedPtr;

}

Of note in the above snippet is that the size can be zero, and this will result in the smallest possible allocation being returned.

It is also important to note that if a region is full, the next region is used. Meaning the binning is not "strict" as class 2 allocations can land in the class 3 region if the class 2 region is full.

The cache

To speed up allocations, and avoid lock congestion each thread is assigned a TSD. A TSD is a kind of "lock manager" for a local cache, and there is a one-to-one mapping between caches and TSDs (it is not specified what TSD stands for). Multiple threads can share a TSD, and they are assigned to each one in a round-robin fashion:

NOINLINE void initThread(Allocator *Instance) NO_THREAD_SAFETY_ANALYSIS {

initOnceMaybe(Instance);

// Initial context assignment is done in a plain round-robin fashion.

const u32 Index = atomic_fetch_add(&CurrentIndex, 1U, memory_order_relaxed);

setCurrentTSD(&TSDs[Index % NumberOfTSDs]);

Instance->callPostInitCallback();

}The pointer to the assigned TSD is stored in the thread local storage (TLS) of each thread.

The getCache() call on a TSD will then return the SizeClassAllocatorLocalCache (local_cache.h) attached to that TSD.

SizeClassAllocatorLocalCache, are per-TSD collections of free chunks that the primary allocator always draws upon. The cache is essentially a collection of PerClass objects (one for each class as the name suggests), with functions for allocation, deallocation, refilling and draining for them:

struct alignas(SCUDO_CACHE_LINE_SIZE) PerClass {

u16 Count;

u16 MaxCount;

// Note: ClassSize is zero for the transfer batch.

uptr ClassSize;

CompactPtrT Chunks[2 * SizeClassMap::MaxNumCachedHint];

};These are initialized at the same time as the primary allocator:

NOINLINE void initCache() {

for (uptr I = 0; I < NumClasses; I++) {

PerClass *P = &PerClassArray[I];

const uptr Size = SizeClassAllocator::getSizeByClassId(I);

P->MaxCount = static_cast<u16>(2 * getMaxCached(Size));

if (I != BatchClassId) {

P->ClassSize = Size;

} else {

// ClassSize in this struct is only used for malloc/free stats, which

// should only track user allocations, not internal movements.

P->ClassSize = 0;

}

}

}The cache has a different size per class as can be seen above, the getMaxCached() computes the maxCached for a specific size:

static u16 getMaxCachedHint(uptr Size) {

DCHECK_NE(Size, 0);

u32 N;

// Force a 32-bit division if the template parameters allow for it.

if (Config::MaxBytesCachedLog > 31 || Config::MaxSizeLog > 31)

N = static_cast<u32>((1UL << Config::MaxBytesCachedLog) / Size);

else

N = (1U << Config::MaxBytesCachedLog) / static_cast<u32>(Size);

// Note that Config::MaxNumCachedHint is u16 so the result is guaranteed to

// fit in u16.

return static_cast<u16>(Max(1U, Min<u32>(Config::MaxNumCachedHint, N)));

}Here are some examples of the maxCached value for different classes:

| Class ID | Max Cached |

|---|---|

| 1 | 13 |

| 2 | 13 |

| 3 | 13 |

| 4 | 13 |

| … | … |

| 15 | 10 |

| 16 | 7 |

| … | … |

Cache allocation

The function looks like the following:

void *allocate(uptr ClassId) {

DCHECK_LT(ClassId, NumClasses);

PerClass *C = &PerClassArray[ClassId]; // [1]

if (C->Count == 0) {

if (UNLIKELY(!refill(C, ClassId))) // [2]

return nullptr;

DCHECK_GT(C->Count, 0);

}

// ...

CompactPtrT CompactP = C->Chunks[--C->Count]; // [3]

// ... Updating stats ...

return Allocator->decompactPtr(ClassId, CompactP);

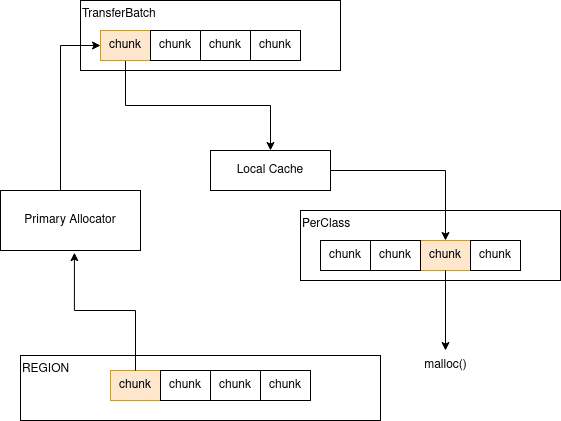

}It retrieves the PerClass structure at [1] and checks the cache for chunks. Assuming there are chunks to fetch, it will pop one at [3] and return it. If it has no chunks it will call refill [2] to try and refill the local cache.

Finally Allocator->decompactPtr decompacts the pointers that are internally used by scudo. These are 32-bit representations of pointers based on offsets from a region’s base:

// Defines the type and scale of a compact pointer. A compact pointer can

// be understood as the offset of a pointer within the region it belongs

// to, in increments of a power-of-2 scale.

// eg: Ptr = Base + (CompactPtr << Scale).If the refilling of the PerClass array fails, this means that the region is exhausted. No chunk is returned, and it is up to the logic in the combined allocator to decide what to do. Recall that the combined allocator will move to the next class if the cache allocation fails.

This means that given the primitive to allocate enough, it is possible to make malloc(0x10) (normally class 1), return a pointer to the class 2 region:

Allocated class 0x0b chunk :

0 1 2 3 4 5 6 7 8 9 A B C D E F

7647f11180 0b 01 10 00 00 00 90 3c 00 00 00 00 00 00 00 00

Proceeding to allocate a lot of class 0x0b chunks...

Now we are in class 0x0c, after 931156 allocations:

0 1 2 3 4 5 6 7 8 9 A B C D E F

7657f0f680 0c 01 10 00 00 00 75 96 00 00 00 00 00 00 00 00Cache refill

Refilling a local cache is a way of requesting more chunks from the primary allocator. If the local cache instance is out of free chunks for the requested size, refill is called:

NOINLINE bool refill(PerClass *C, uptr ClassId) {

initCacheMaybe(C);

TransferBatch *B = Allocator->popBatch(this, ClassId);

if (UNLIKELY(!B))

return false;

DCHECK_GT(B->getCount(), 0);

C->Count = B->getCount();

B->copyToArray(C->Chunks);

B->clear();

destroyBatch(ClassId, B);

return true;

}popBatch is called on the allocator (primary32/64) popping a TransferBatch from the region’s BatchList. If the list is empty, an attempt is made to refill it by creating new TransferBatch objects from the region, expanding the mapping if necessary.

TransferBatch

A TransferBatch efficiently stores batches of free chunks for a designated region in a compact format. Its primary function is to swiftly transfer and replenish the cache. The TransferBatch can accommodate up to MaxNumCached chunks, which is half the maximum capacity of a PerClass list. This design ensures that there is always available space in the cache to hold any newly freed chunks from the program.

The journey of a chunk can be seen here:

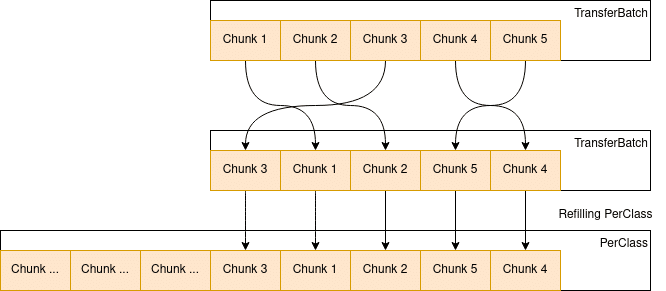

Creating new TransferBatch objects and refilling the Primary allocator is done in populateFreeListAndPopBatch, the number of batches prepared depends on the class size:

const u32 NumberOfBlocks =

Min(MaxNumBatches * MaxCount, // [1]

static_cast<u32>((Region->MemMapInfo.MappedUser -

Region->MemMapInfo.AllocatedUser) /

Size));

DCHECK_GT(NumberOfBlocks, 0);

constexpr u32 ShuffleArraySize =

MaxNumBatches * TransferBatch::MaxNumCached;

CompactPtrT ShuffleArray[ShuffleArraySize]; // [2]

DCHECK_LE(NumberOfBlocks, ShuffleArraySize);

const uptr CompactPtrBase = getCompactPtrBaseByClassId(ClassId);

uptr P = RegionBeg + Region->MemMapInfo.AllocatedUser;

for (u32 I = 0; I < NumberOfBlocks; I++, P += Size)

ShuffleArray[I] = compactPtrInternal(CompactPtrBase, P); // [3]

// ...

// [4]

shuffle(ShuffleArray + NumberOfBlocks - N, N, &Region->RandState);

pushBlocksImpl(C, ClassId, Region, &ShuffleArray[NumberOfBlocks - N], N,

/*SameGroup=*/true);

TransferBatch *B = popBatchImpl(C, ClassId, Region); // [5]

- First the number of blocks to prepare in batches is calculated, as either the

MaxNumBatches * MaxCountor the rest of the region, whichever is smaller. [1] - An array of compacted pointers are then prepared, this will be the transfer batches. [2]

- The compacted pointers to the region space are then written into the array. [3]

- The array is shuffled, and pushed into the freelist of the primary allocator. [4]

- Finally a transferbatch is popped and returned [5]

The shuffling here results in the randomization of chunk allocations seen from the malloc() callers perspective. This is because the TransferBatch object copies chunks into the localcache PerClass array by using a single memcpy:

template <class SizeClassAllocator> struct TransferBatch {

// ...

void clear() { Count = 0; }

void moveToArray(CompactPtrT *Array) {

memcpy(Array, Batch, sizeof(Batch[0]) * Count);

clear();

}

// ...

private:

CompactPtrT Batch[MaxNumCached];

u16 Count;

};This is called in the refill function, meaning the internal order of chunks in a TransferBatch is preserved into the localcache. Shuffling therefore only happens when a TransferBatch is refilled.

Freeing

Freeing a chunk in scudo is relatively simple:

- If initialization has not been done, initialize a minimal config

- The free hook is called.

- Checks if the chunk is allocated – throws error if not (double free).

- calls

quarantineOrDeallocateChunk.

As the rather descriptive name suggests, quarantineOrDeallocateChunk will either put the chunk into the quarantine, or push it to the local cache freelist. In the case of a disabled or bypassed quarantine, the function is essentially performing this on the local cache PerClass:

const bool NeedToDrainCache = C->Count == C->MaxCount;

if (NeedToDrainCache)

drain(C, ClassId);

C->Chunks[C->Count++] =

Allocator->compactPtr(ClassId, reinterpret_cast<uptr>(P));It is essentially the opposite of the allocate function except when the cache is full, in which case it is drained.

Draining the Cache

Draining a cache is the act of releasing memory from a PerClass, back into the primary allocator. This is done whenever a local cache freelist is full. As can be seen below, half of the PerClass array is drained.

NOINLINE void drain(PerClass *C, uptr ClassId) {

const u16 Count = Min(static_cast<u16>(C->MaxCount / 2), C->Count);

Allocator->pushBlocks(this, ClassId, &C->Chunks[0], Count);

// u16 will be promoted to int by arithmetic type conversion.

C->Count = static_cast<u16>(C->Count - Count);

for (u16 I = 0; I < C->Count; I++)

C->Chunks[I] = C->Chunks[I + Count];

}

Here the chunks are pushed back to the primary allocator using TransferBatch objects as well.

Header checksum

The checksum is by default a CRC32 checksum, but scudo also supports a bsd checksum. The implementations of the checksum computation function can be found in computeChecksum:

u32 Crc = static_cast<u32>(CRC32_INTRINSIC(Seed, Value));

for (uptr I = 0; I < ArraySize; I++)

Crc = static_cast<u32>(CRC32_INTRINSIC(Crc, Array[I]));

return static_cast<u16>(Crc ^ (Crc >> 16));The CRC32_INTRINSIC uses processor specific instructions to speed up CRC computation.

Since the checksum uses only 2 bytes, so the 4 byte output of CRC32 is truncated by xor’ing the upper 2 bytes with the lower 2.

The checksum is only checked for validity when the chunk is free’d, reallocated, or malloc_usable_size is called on the pointer:

[SM G980F::alloc_playground ]->let source = malloc(0x60)

let alloc = null

for (let i = 0; i < 0x40; i++) {

alloc = malloc(0x60)

if (alloc.sub(0x70).equals(source)) {

break

}

}

var byteArray = new Array(0xc0).fill(0x41)

Memory.writeByteArray(source, byteArray) // No crash

free(alloc) // Crash

Error: abort was called

at <eval> (<input>:17)The Quarantine

A quarantine is also part of the scudo allocator, but as previously mentioned it is turned off.

The documentation has the following to say about the quarantine:

[The quarantine] offers a way to delay the deallocation operations, preventing blocks to be immediately available for reuse. Blocks held will be recycled once certain size criteria are reached. This is essentially a delayed freelist which can help mitigate some use-after-free situations. This feature is fairly costly in terms of performance and memory footprint, is mostly controlled by runtime options and is disabled by default

This gives a suggestion to the reason for it being disabled, given its performance implications.

Essentially, the quarantine will hold chunks for a certain time after they have been freed, making them unavailable for reallocation until the quarantine is full, in which case it will recycle the chunks in it back to the primary allocator.

Because of the complexity of the quarantine, and the fact that it is disabled by default, we will defer discussing it until it is introduced.

So what does it mean for exploits?

First off it means that exploitation is harder, quite a bit harder. Better primitives are needed to exploit the same bugs.

Binning

One immediate effect of the binned allocations is that allocations of different sizes will never exist next to each other:

Size 0x20 alloc at 0x7b36c841f0

Size 0x90 alloc at 0x7b96c84320

Size 0x20 alloc at 0x7b36c84130

Size 0x90 alloc at 0x7b96c836c0

Size 0x20 alloc at 0x7b36c84310

Size 0x90 alloc at 0x7b96c83cf0

Size 0x20 alloc at 0x7b36c848b0

Size 0x90 alloc at 0x7b96c82dd0For example a buffer overflow targeting a function pointer in an object of a different size than the overflown one will not work. While this is not a new concept, it will be a new concept to people only familiar with dlmalloc and derivatives.

State

The allocator keeps a state field in the header, indicating whether the chunk is allocated or free, checking this field upon freeing and allocating removes the possibility for double frees –

unless a primitive allows to overwrite a header and maintain a correct checksum. That is a topic for a different post.

Randomization

This is a big hurdle when attacking scudo, in practice it means that you cannot know where chunks land in relation to each-other. Two malloc‘ed chunks will not be placed next to each other if the freelist is empty (unlike in dlmalloc).

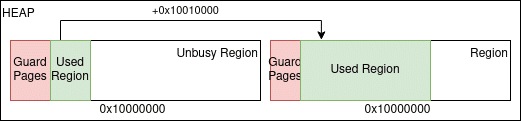

The randomization of the order of regions makes cross region attacks hard as guessing the offset between specific regions becomes infeasible. We can still target cross regions, but we will not know what region we land in. Given a leak from an unbusy class, we can hit the next bin up by adding 0x10010000 (next region offset plus 16 pages). This is guaranteed to be a valid pointer to the next class.

If the class is not busy, the used region space will be smaller. Thus it used to generally hold that a heap pointer added with 0x10010000 will always hit the next class if the targeted class is busier (has more used space), than the region of the leaked pointer.

This is of course no longer the case as our leaked pointer could be in the very last region, meaning we would hit invalid memory. It is also hard to tell the business of the adjacent regions, if we do not know what class they belong to.

Determinism in local cache

In scudo a sequence of malloc and free will be deterministic insofar as the sequence is shorter than the PerClass->MaxCount. Since when chunks are drained, they might be used to refill a different thread’s cache.

malloc(0x50): 0x6e8c85d8b0

malloc(0x50): 0x6e8ca1df90

malloc(0x50): 0x6e8c8b8690

malloc(0x50): 0x6e8c79af10

malloc(0x50): 0x6e8c944550

malloc(0x50): 0x6e8c9ea210

FREEING

malloc(0x50): 0x6e8c85d8b0

malloc(0x50): 0x6e8ca1df90

malloc(0x50): 0x6e8c8b8690

malloc(0x50): 0x6e8c79af10

malloc(0x50): 0x6e8c944550

malloc(0x50): 0x6e8c9ea210Chunk header checksums

Unlike its older brother jemalloc, scudo does keep heap metadata on the heap, and while this usually means more corruption targets, the checksumming makes it difficult to attack the allocator itself.

There are a few ways to defeat the checksums when using an overflow:

- Skipping the header, if your overflow primitive is good enough, skipping past the header would defeat the mitigation.

- Leaking, given a linear out-of-bounds read (OOB), one could write the leaked header back.

- Avoiding a free(), if corrupted objects are never freed the corruption will not be detected.

- Recomputing checksum, with a leak of a header one could recover the random seed used for checksums. Retrieving a valid seed is relatively time-consuming.

Conclusions

The scudo allocator is simple but proves effective at hardening the heap against attacks. It succeeds in increasing the resources needed to find primitives that defeat its different mitigations, advancing your overall cybersecurity.

In the next post, we’ll go deeper into offensive cybersecurity and provide more training. We’ll look at tooling around scudo and present a practical exploit against the allocator.

Resources

[1] https://un1fuzz.github.io/articles/scudo_internals.html

[2] https://trenchant.io/scudo-hardened-allocator-unofficial-internals-documentation/

[3] https://www.synacktiv.com/publications/behind-the-shield-unmasking-scudos-defenses